Photo by Scott Webb

A Seattle Police Department (SPD) detective was caught using facial recognition software, and it turns out this was not his first surveillance-related reprimand. The Office of Police Accountability (OPA) has declined to sustain a violation of Seattle’s Surveillance Ordinance for either his use of Clearview AI or his drone photos of a personal residence, which leaves us wondering what, if anything, would constitute an SPD violation of that law. Similar questions arise around King County’s recent ban on facial recognition software.

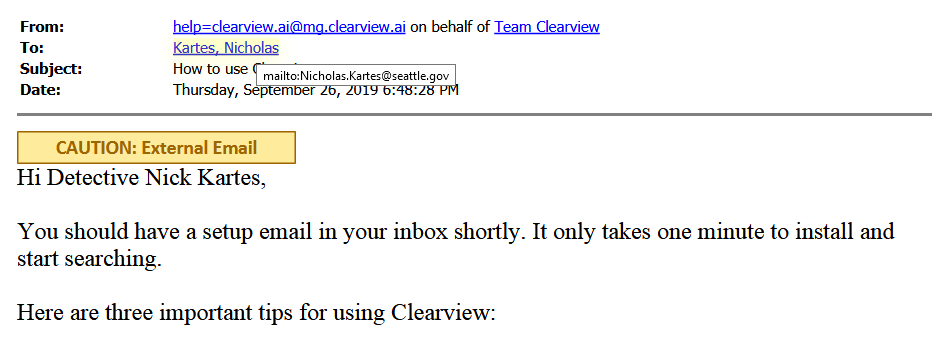

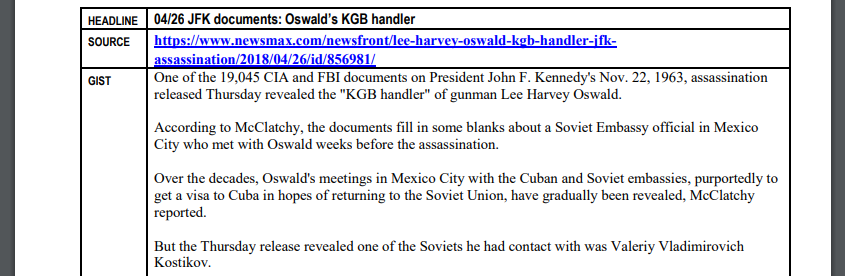

In Closed Case Summary 2020OPA-0731, published on Thursday (July 1), Detective Nicholas Kartes (referred to as “NE#1”) admitted to using Clearview AI approximately 30 times: 10 times on SPD cases and 20 times on cases for other departments. He did not keep records or notify his supervisors of his usage. He further claimed to have received zero matches on SPD cases and one match on a case for “a King County agency”, which he allegedly notified of his use of Clearview AI. OPA sustained one allegation of unprofessionalism against Kartes but did not find that he violated the law, specifically the Seattle Surveillance Ordinance.

Bridge Burner Collective, a group of police transparency activists, first uncovered Kartes’ use of Clearview AI in November 2020 when a public records request revealed he had logged into the app over 30 times from a computer on the City of Seattle network.

The detective has received a one-day suspension without pay following the OPA investigation into his usage of Clearview AI.

Clearview AI is a controversial facial recognition app that matches user-uploaded photos to billions of pictures scraped from the internet and social media websites without users’ consent, raising concerns around privacy and accuracy. The company’s founder also has well-documented ties to prominent far-right figures.

The OPA ultimately ruled that Kartes did not violate the law because Clearview AI does not fall under the criteria set forth by the Surveillance Ordinance as “surveillance technology”.

Seattle’s Surveillance Ordinance defines surveillance as “to observe or analyze the movements, behavior, or actions of identifiable individuals in a manner that is reasonably likely to raise concerns about civil liberties, freedom of speech or association, racial equity or social justice.”

The OPA argued that because Clearview AI (and facial recognition technology in general) identifies individuals, rather than tracking their movements, behavior, or actions, it does not fall under this definition. If this is the case, many technologies conventionally considered surveillance, such as SPD’s Automated License Plate Readers (previously reviewed under the Ordinance), would likely also fail to meet these criteria for the same reason.

According to City Councilmember Lisa Herbold, however, the Council Central Staff’s analysis is that City departments must submit Privacy and Surveillance Assessments for any new “non-standard technology”, after which a determination about the qualification of that technology would be made. This would mean that, regardless of whether Clearview AI falls into the surveillance criteria, Kartes is in fact in violation of the Surveillance Ordinance because the app was used without first getting approval through this process.

“SMC 14.18.020.B.1 first requires a process for determining whether a specific technology that a department wishes to acquire is surveillance technology. ITD’s process requires City staff to submit a Privacy and Surveillance Assessment (PSA) before new non-standard technology may be acquired. The PSA is used to determine if a given technology meets the City’s definition of ‘surveillance technology’ as defined by the City’s Surveillance Policy. The determination uses specific ‘exclusion criteria’ and ‘inclusion criteria’ in addition to the definition of Surveillance Technology (see below). Unless the facial recognition function is part of a use that is excluded from the surveillance provisions, it appears that facial recognition technology would, at a minimum, meet the ‘inclusion criteria’ of personally identifiable information or ‘raising reasonable concerns about impacts to civil liberty, freedom of speech or association, racial equity, or social justice.’”

Kartes’ use of the app for other police departments also raises questions around the circumstances under which he became involved in these cases. When questioned about the nature of the detective’s involvement in the case that turned up a match, OPA declined to comment and directed us to “obtain more information by filing a Public Disclosure Request”. (SPD has faced criticism due to long response times and its controversial practice of “grouping” requests of prolific requesters. SPD has grouped requests made by Bridge Burner Collective, which delays responses further.)

While the Closed Case Summary does not provide any further details about how Kartes was involved in the investigations, the situation recalls previous reporting on the FITlist. The FITlist was a secretive email listserv where cops from agencies across Washington state (including SPD) sent requests for those with access to facial recognition tools to run searches on their behalf. A thread from the list instructed officers to “not mention FITlist in your reports or search warrant affidavits.”

To his credit, Kartes claims he told the “King County agency” that received the facial recognition match that it had been found using Clearview AI. However, this behavior raises serious accountability questions regarding facial recognition technologies. When facial recognition results are exchanged across departments, the department running an investigation no longer necessarily owns the records of the searches used in it, which complicates efforts at accountability and transparency. Whether the use of facial recognition software in an investigation is disclosed at all would depend on the discretion of the investigating officer. Similarly, if a technology runs up against the Surveillance Ordinance or the recent King County ban on facial recognition, police officers could evade the regulations by simply asking other departments to run their facial recognition searches.

Interestingly, this is not Detective Kartes’ first run-in with the Surveillance Ordinance. The OPA file mentions another case report, 2020OPA-0305, in which Detective Kartes (referred to in the document as “NE#2”) used a personal drone to take pictures of a residence. In this investigation, OPA declared that while the detective “was in non-compliance with the [Surveillance Ordinance] law”, it chose not to sustain the allegation because “OPA does not know how many other officers in the Department are also unaware of or, potentially, do not understand this law and all of its requirements and parameters.” If the roles were reversed, would SPD exonerate a civilian for not being aware of or understanding a law?

With little to no consequences for misconduct, even as criticism mounts against the OPA, it can hardly be surprising that officers like Detective Kartes are repeatedly caught flouting laws and regulations.